Introduction to Program Evaluation for Public Health Programs: A Self-Study Guide

On this Page

- Why Is It Important to Justify Conclusions?

- Analyzing and Synthesizing The Findings

- Setting Program Standards for Performance

- Interpreting the Findings and Making Judgments

- Tips To Remember When Interpreting Your Findings

- Illustrations from Cases

- Standards for Step 5: Justify Conclusions

- Checklist for Step 5: Justify Conclusions

- Worksheet 5 – Justify Conclusions

Step 5: Justify Conclusions

Whether your evaluation is conducted to show program effectiveness, help improve the program, or demonstrate accountability, you will need to analyze and interpret the evidence gathered in Step 4. Step 5 encompasses analyzing the evidence, making claims about the program based on the analysis, and justifying the claims by comparing the evidence against stakeholder values.

Why Is It Important to Justify Conclusions?

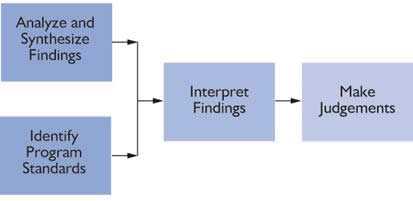

Why isn’t this step called “analyze the data”? Because as central as data analysis is to evaluation, evaluators know that the evidence gathered for an evaluation does not necessarily speak for itself. As the figure illustrates, conclusions become justified when analyzed and synthesized findings (“the evidence”) are interpreted through the prism of values (the standards that stakeholders bring) and then judged accordingly. Justification of conclusions is fundamental to utilization-focused evaluation. When agencies, communities, and other stakeholders agree that the conclusions are justified, they will be more inclined to use the evaluation results for program improvement.

The complicating factor, of course, is that different stakeholders may bring different and even contradictory standards and values to the table. As the old adage goes, “where you stand depends on where you sit.” Fortunately for those using the CDC Framework, the work of Step 5 benefits from the efforts of the previous steps: differences in values and standards will have been identified during stakeholder engagement in Step 1, and those stakeholder perspectives will also have been reflected in the program description and evaluation focus.

Analyzing and Synthesizing The Findings

Data analysis is the process of organizing and classifying the information you have collected, tabulating it, summarizing it, comparing the results with other appropriate information, and presenting the results in an easily understandable manner. The five steps in data analysis and synthesis are straightforward:

- Enter the data into a database and check for errors. If you are using a surveillance system such as the Behavioral Risk Factor Surveillance System (BRFSS) or the Pregnancy Risk Assessment Monitoring System (PRAMS), the data have already been checked, entered, and tabulated by those conducting the survey. If you are collecting data with your own instrument, you will need to select the computer program you will use to enter and analyze the data, and determine who will enter, check, tabulate, and analyze the data.

- Tabulate the data. The data need to be tabulated to provide information (such as a number or percentage) for each indicator. Some basic calculations include determining:

  - The number of participants

  - The number of participants achieving the desired outcome

  - The percentage of participants achieving the desired outcome

- Analyze and stratify your data by various demographic variables of interest, such as participants’ race, sex, age, income level, or geographic location.

- Make comparisons. When examination of your program includes research as well as evaluation studies, use statistical tests to assess whether observed differences (between comparison and intervention groups, between geographic areas, or between the pre-intervention and post-intervention status of the target population) are larger than would be expected by chance.

- Present your data in a clear and understandable form. Data can be presented in tables, bar charts, pie charts, line graphs, and maps.
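The error-checking, tabulation, and stratification steps above can be sketched in a few lines of code. This is a minimal illustration only, assuming hypothetical participant records with an ID, one demographic field (`sex`), and a yes/no outcome; the `Record` type and the function names are illustrative, not part of any CDC tool, and a real evaluation would more likely use a statistical package.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    """One participant's row; the field names are hypothetical."""
    participant_id: str
    sex: str                          # example stratification variable
    achieved_outcome: Optional[bool]  # None means the value is missing


def check_errors(records):
    """Flag duplicate IDs and missing outcome values."""
    problems = []
    seen = set()
    for r in records:
        if r.participant_id in seen:
            problems.append(f"duplicate id {r.participant_id}")
        seen.add(r.participant_id)
        if r.achieved_outcome is None:
            problems.append(f"missing outcome for {r.participant_id}")
    return problems


def tabulate(records):
    """Number of participants, number achieving the outcome, and percentage.

    A missing outcome counts in the denominator but not as achieved, so run
    check_errors first and resolve anything it flags.
    """
    n = len(records)
    achieved = sum(1 for r in records if r.achieved_outcome)
    percent = 100.0 * achieved / n if n else 0.0
    return {"participants": n, "achieved": achieved, "percent": percent}


def stratify(records, key):
    """Repeat the tabulation for each level of a demographic variable."""
    groups = defaultdict(list)
    for r in records:
        groups[key(r)].append(r)
    return {level: tabulate(groups[level]) for level in sorted(groups)}


if __name__ == "__main__":
    records = [
        Record("001", "F", True),
        Record("002", "F", False),
        Record("003", "M", True),
        Record("004", "M", True),
    ]
    print(check_errors(records))  # []
    print(tabulate(records))      # {'participants': 4, 'achieved': 3, 'percent': 75.0}
    print(stratify(records, key=lambda r: r.sex))
```

Passing the stratification variable as a `key` function keeps the same tabulation reusable for race, age group, income level, or geographic location.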

In evaluations that use multiple methods, evidence patterns are detected by isolating important findings (analysis) and combining different sources of information to reach a larger understanding (synthesis).
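For the comparison step listed above, when the indicator is a proportion (for example, the share of participants achieving the desired outcome), one common choice is a two-proportion z-test. The sketch below assumes two independent groups, such as an intervention group and a comparison group, with counts large enough for the normal approximation to hold. Pre/post data collected from the *same* people would call for a paired test (such as McNemar's test) instead. The counts in the example are hypothetical.

```python
import math


def two_proportion_z_test(x1, n1, x2, n2):
    """Compare the share achieving an outcome in two independent groups.

    x1/n1 and x2/n2 are (number achieving the outcome, group size).
    Returns (difference p1 - p2, z statistic, two-sided p-value).
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # common proportion if there is no true difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("all or none achieved the outcome; the test is undefined")
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail area of the standard normal
    return p1 - p2, z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 120 of 200 in the intervention group, 90 of 200 in the comparison group.
    diff, z, p = two_proportion_z_test(120, 200, 90, 200)
    print(f"difference = {diff:.2f}, z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be due to chance alone; it does not by itself rule out the alternative explanations discussed later in this step.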

Setting Program Standards for Performance

Program standards (not to be confused with the four evaluation standards discussed throughout this document) are the benchmarks used to judge program performance. They reflect stakeholders’ values about the program and are fundamental to sound evaluation. The program and its stakeholders must articulate and negotiate the values that will be used to consider a program successful, “adequate,” or unsuccessful. Possible standards that might be used in determining these benchmarks are:

- Needs of participants

- Community values, expectations, and norms

- Program mission and objectives

- Program protocols and procedures

- Performance by similar programs

- Performance by a control or comparison group

- Resource efficiency

- Mandates, policies, regulations, and laws

- Judgments of participants, experts, and funders

- Institutional goals

- Social equity

- Human rights

When stakeholders disagree about standards or values, the disagreement may reflect differences about which outcomes are deemed most important. Or stakeholders may agree on outcomes but disagree on the amount of progress on an outcome necessary to judge the program a success. This threshold for each indicator, sometimes called a “benchmark” or “performance indicator,” is often based on an expected change from a known baseline. For example, all childhood lead poisoning prevention (CLPP) stakeholders may agree that a reduction in elevated blood lead levels (EBLL) among program participants and provider participation in screening are key outcomes for judging the program a success. But do they agree on how large a decrease in EBLL must be achieved, or how many providers need to undertake screening, for the program to be successful?

In Step 5, you will negotiate consensus on these standards and compare your results with performance indicators to justify your conclusions about the program. Performance indicators should be achievable but challenging, and should take into account the program’s stage of development, the logic model, and the stakeholders’ expectations. Identifying and addressing differences in stakeholder values and standards early in the evaluation is helpful: if performance standards are defined while data are being collected or analyzed, the process can become acrimonious and adversarial.
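A benchmark defined as an expected change from a known baseline reduces to simple arithmetic once stakeholders have agreed on the numbers. The sketch below is illustrative only: the `Benchmark` class is hypothetical, and the baselines, targets, and observed values are placeholders, not CLPP data. It assumes the benchmark is stated as a *relative* change (a 20% reduction from a 10% baseline gives a target of 8%). A benchmark stated in percentage points would instead add the change directly to the baseline.

```python
from dataclasses import dataclass


@dataclass
class Benchmark:
    """A negotiated threshold stated as a relative change from a baseline."""
    indicator: str
    baseline: float           # indicator value before the program
    target_change_pct: float  # agreed relative change, e.g. -20.0 means a 20% decrease

    def target(self):
        """Indicator value the program must reach to meet the benchmark."""
        return self.baseline * (1 + self.target_change_pct / 100)

    def met_by(self, observed):
        """True if the observed value reaches the target in the agreed direction."""
        if self.target_change_pct < 0:
            return observed <= self.target()
        return observed >= self.target()


if __name__ == "__main__":
    # Hypothetical CLPP-style benchmarks; all numbers are placeholders.
    benchmarks = [
        (Benchmark("children with EBLL (%)", baseline=10.0, target_change_pct=-20.0), 8.5),
        (Benchmark("providers screening (%)", baseline=40.0, target_change_pct=25.0), 52.0),
    ]
    for bm, observed in benchmarks:
        status = "met" if bm.met_by(observed) else "not met"
        print(f"{bm.indicator}: target {bm.target():.1f}, observed {observed}, {status}")
```

Writing the direction of change into the benchmark itself makes explicit whether "success" means a decrease (EBLL) or an increase (provider screening).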

Interpreting the Findings and Making Judgments

Judgments are statements about a program’s merit, worth, or significance formed when you compare findings against one or more selected program standards. In forming judgments about a program:

- Multiple program standards can be applied

- Stakeholders may reach different or even conflicting judgments.

Conflicting claims about a program’s quality, value, or importance often indicate that stakeholders are using different program standards or values in making their judgments. This type of disagreement can prompt stakeholders to clarify their values and reach consensus on how the program should be judged.
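The point that conflicting claims often trace back to different program standards can be made concrete with a small sketch. Here the same finding is judged against a threshold held by each of several stakeholders. The stakeholder names, the thresholds, and the finding are all hypothetical, and each threshold is loosely tied to one of the possible standards listed earlier.

```python
def judge(finding, standards):
    """Judge one finding against each stakeholder's threshold.

    standards maps a stakeholder to the minimum value they consider success.
    """
    return {
        stakeholder: "success" if finding >= threshold else "not yet"
        for stakeholder, threshold in standards.items()
    }


def conflicting(judgments):
    """True when the same evidence leads stakeholders to different verdicts."""
    return len(set(judgments.values())) > 1


if __name__ == "__main__":
    finding = 55.0  # hypothetical: % of enrolled providers now screening
    standards = {
        "program staff": 50.0,    # based on program objectives
        "funder": 60.0,           # based on performance of similar programs
        "community board": 45.0,  # based on community expectations
    }
    verdicts = judge(finding, standards)
    for stakeholder, verdict in verdicts.items():
        print(f"{stakeholder}: {verdict}")
    print("conflicting judgments:", conflicting(verdicts))
```

Because the evidence is identical in every row, any disagreement in the output comes entirely from the thresholds, which is exactly the conversation stakeholders need to have.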

Tips To Remember When Interpreting Your Findings

- Interpret evaluation results with the goals of your program in mind.

- Keep your audience in mind when preparing the report. What do they need and want to know?

- Consider the limitations of the evaluation:

  - Possible biases

  - Validity of results

  - Reliability of results

- Are there alternative explanations for your results?

- How do your results compare with those of similar programs?

- Have the different data collection methods used to measure your progress shown similar results?

- Are your results consistent with theories supported by previous research?

- Are your results similar to what you expected? If not, why do you think they may be different?

Source: US Department of Health and Human Services. Introduction to program evaluation for comprehensive tobacco control programs. Atlanta, GA: US Department of Health and Human Services, Centers for Disease Control and Prevention, Office on Smoking and Health, November 2001.
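The tips above ask whether the different data collection methods used to measure progress have shown similar results. One simple way to check is to line up each method's estimate of the same indicator and compare the spread with a tolerance that stakeholders agree on in advance. This is a minimal sketch; the method names, estimates, and the 5-point tolerance are hypothetical.

```python
def consistency_report(estimates, tolerance):
    """Compare estimates of one indicator from different data collection methods.

    estimates maps method name -> estimate; tolerance is the largest spread
    (in the same units) that stakeholders agree still counts as consistent.
    """
    if len(estimates) < 2:
        raise ValueError("need estimates from at least two methods")
    low = min(estimates, key=estimates.get)
    high = max(estimates, key=estimates.get)
    spread = estimates[high] - estimates[low]
    return {"low": low, "high": high, "spread": spread, "consistent": spread <= tolerance}


if __name__ == "__main__":
    # Hypothetical: % of participants reporting a behavior change, by method.
    estimates = {"participant survey": 62.0, "program records": 55.0, "observation": 60.0}
    print(consistency_report(estimates, tolerance=5.0))
    # {'low': 'program records', 'high': 'participant survey', 'spread': 7.0, 'consistent': False}
```

An inconsistent result does not mean one method is wrong; it is a prompt to look for possible biases and alternative explanations, such as self-report inflating survey estimates.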

Illustrations from Cases

Let’s use the affordable housing program to illustrate this chapter’s main points about the sources of stakeholder disagreements and how they may influence an evaluation. For example, the various stakeholders may disagree about the key outcomes for success. Maybe the organization’s staff, and even the family, deem the completion and sale of the house most important. By contrast, the civic and community associations that sponsor houses and supply volunteers, or the foundations that fund the organization’s infrastructure, may demand that home ownership produce improvement in life outcomes, such as better jobs or academic performance. Even when stakeholders agree on the outcomes, they may disagree about the amount of progress to be made on these outcomes. For example, while churches may want to see improved life outcomes just for the individual families they sponsor, some foundations may be attracted to the program by the chance to change whole communities by changing the mix of renters and homeowners. As emphasized earlier, it is important to identify these values and disagreements about values early in the evaluation. That way, consensus can be negotiated so that the program description and the evaluation design and focus reflect the needs of the various stakeholders.

Standards for Step 5: Justify Conclusions

| Standard | Questions |
|---|---|
| Utility | Have you carefully described the perspectives, procedures, and rationale used to interpret the findings? Have stakeholders considered different approaches for interpreting the findings? |
| Feasibility | Is the approach to analysis and interpretation appropriate to the level of expertise and resources? |
| Propriety | Have the standards and values of those less powerful or those most affected by the program been taken into account in determining standards for success? |
| Accuracy | Can you explicitly justify your conclusions? Are the conclusions fully understandable to stakeholders? |

Checklist for Step 5: Justify Conclusions

- Check data for errors.

- Consider issues of context when interpreting data.

- Assess results against available literature and results of similar programs.

- If multiple methods have been employed, compare different methods for consistency in findings.

- Consider alternative explanations.

- Use existing standards (e.g., Healthy People 2010 objectives) as a starting point for comparisons.

- Compare program outcomes with those of previous years.

- Compare actual with intended outcomes.

- Document potential biases.

- Examine the limitations of the evaluation.
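Two of the checklist items, comparing program outcomes with those of previous years and comparing actual with intended outcomes, are straightforward calculations once the yearly values are tabulated. A minimal sketch, assuming hypothetical yearly values for a single indicator and a hypothetical intended value:

```python
def compare_outcomes(by_year, intended):
    """Compare outcomes with previous years and with the intended outcome.

    by_year maps year -> outcome value (e.g., % achieving the outcome).
    Returns the year-over-year changes and the latest year's gap to the
    intended value (negative means the latest value is below the intended one).
    """
    if not by_year:
        raise ValueError("need at least one year of data")
    years = sorted(by_year)
    changes = {year: by_year[year] - by_year[prev] for prev, year in zip(years, years[1:])}
    latest = years[-1]
    return {"change_from_prior_year": changes, "gap_to_intended": by_year[latest] - intended}


if __name__ == "__main__":
    history = {2009: 48.0, 2010: 52.0, 2011: 57.0}  # hypothetical values
    print(compare_outcomes(history, intended=60.0))
    # {'change_from_prior_year': {2010: 4.0, 2011: 5.0}, 'gap_to_intended': -3.0}
```

Reporting both numbers together helps stakeholders see that a program can be improving steadily yet still fall short of its intended outcome.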

Worksheet 5 – Justify Conclusions

| # | Question | Response |
|---|---|---|
| 1 | Who will analyze the data (and who will coordinate this effort)? | |
| 2 | How will data be analyzed and displayed? | |
| 3 | Against what standards will you compare your interpretations in forming your judgments? | |
| 4 | Who will be involved in making interpretations and judgments and what process will be employed? | |
| 5 | How will you deal with conflicting interpretations and judgments? | |
| 6 | Are your results similar to what you expected? If not, why do you think they are different? | |
| 7 | Are there alternative explanations for your results? | |
| 8 | How do your results compare with those of similar programs? | |
| 9 | What are the limitations of your data analysis and interpretation process (e.g., potential biases, generalizability of results, reliability, validity)? | |
| 10 | If you used multiple indicators to answer the same evaluation question, did you get similar results? | |
| 11 | Will others interpret the findings in an appropriate manner? | |

Contact Evaluation Program

E-mail: cdceval@cdc.gov

- Page last reviewed: May 11, 2012

- Page last updated: May 11, 2012
